Before I get underway: this article is about Microchip PIC micro-controllers. Please understand that I don’t want to get into a flame war with Atmel, MSP430 or other fanboys. My personal preference has been PICs for many years. That is a statement of personal preference; I am not saying that PICs are better than anything else, I am just saying I like them better. Please don’t waste your time trying to convince me otherwise. I have evaluated most other platforms numerous times already, so before you suggest I should look at your XYZ platform of choice, please save your time – the odds are good I have already done so and I am still using PICs.

OK, full RANT mode enabled…

As I understand it, Microchip are in the silicon chip business selling micro-controllers. Actually Microchip make some really awesome parts, and I am guessing here, but I suspect they want to sell lots and lots of those awesome parts, right? So why do they suppress their developer community with crippled compiler software unless you pay large $$$? After all, as a silicon maker they *NEED* to provide tools to make it viable for a developer community to use their parts. It is ridiculous charging for the tools. It’s not like you can buy Microchip tools and then use them for developing on other platforms, so the value of these tools is entirely intrinsic to Microchip’s own value proposition. It might work if you had the whole market wrapped up, but the micro-controller market is awash with other great parts and free, un-crippled tools.

A real positive step forward for Microchip was the introduction of the MPLAB-X IDE. While not perfect, it’s far superior to the now discontinued MPLAB8 and older IDEs which were, err, laughable compared with other tools. The MPLAB-X IDE has a lot going for it: it runs on multiple platforms (Windows, Mac and Linux) and it mostly works very well. I have been a user of MPLAB-X from day one, and while the migration was a bit of a pain and the earlier versions had a few odd quirks, every update of the IDE has just gotten better and better. I make software products in my day job so I know what it takes, and to the product manager(s) and team that developed the MPLAB-X IDE, I salute you for a job well done.

Now of course the IDE alone is not enough, you also need a good compiler, and for the Microchip parts there are now basically three compilers: XC8, XC16 and XC32. These compilers, as I understand it, are based on the HI-TECH PRO compilers that Microchip acquired when they bought HI-TECH in 2009. Since that acquisition they have been slowly consolidating the compilers and obsoleting the old MPLAB C compilers. Microchip getting these tools is a very good thing because they needed something better than they had, but they had to buy the Australian company HI-TECH Software to get them. It would appear they could not develop these themselves, so acquiring them was the logical thing to do. I can only speculate that the purchase of HI-TECH was most likely justified, internally and/or to investors, on the promise of generating incremental revenues from the tools. Otherwise why bother buying them, right? Any sound investment would be backed by a revenue plan, and the easiest way to do that would be to say “in the next X years we can sell Y number of compilers for Z dollars” and show a return on investment. Can you imagine an investor saying yes to “Let’s buy HI-TECH for $20M (I just made that number up) so we can refocus their efforts on Microchip parts only and then give these really great compilers and libraries away!”? Any sensible investor or finance person would probably ask “why would we do that?” or “where is the return that justifies the investment?”.

But was expanding revenue the *real* reason for Microchip buying HI-TECH, or was there an underlying need to have the quality the HI-TECH compilers offered over the Microchip compilers? It was pretty clear that Microchip themselves were way behind, but that storyline would not go down too well with investors. Imagine suggesting “we need to buy HI-TECH because they are way ahead of us and we cannot compete”. Anyone looking at that from a financial point of view would probably not understand why having the tools was important without some financial rationale that shows on paper that the investment would yield a return.

Maybe Microchip bought HI-TECH as a strategic move to provide better tools for their parts, but I am making the assumption there must have been some ROI commitment internally. Why? Because Microchip do have a very clear commercial strategy around their tools: they provide free compilers, but they are crippled and generate unoptimised code. In some cases the code generated has junk inserted, and the optimised version simply removes this junk! I have also read somewhere that you can hack the settings to use different command-line options to re-optimise the produced code even on the free version, because at the end of the day it’s just GCC behind the scenes. However, doing this may well revoke your licence’s right to use their libraries.

So then, are Microchip in the tools business? Absolutely not. In a letter from Microchip to its customers after the acquisition of HI-TECH [link] they stated that “we will focus our energies exclusively on Microchip related products”, which meant dropping future development of tools for the non-Microchip parts that HI-TECH used to also focus on. As an independent provider HI-TECH could easily justify selling their tools for money. Their value proposition was that they provided compilers that were much better than the “below-par” compilers put out by Microchip, and being independent there is an implicit justification for them charging for the tools. As a result Microchip customers had a choice: they could buy the crappy compiler from Microchip or they could buy a far superior one from HI-TECH. It all makes perfect sense. You see, you could argue that HI-TECH only had market share in the first place because the Microchip tools were poor enough that there was a need for someone to fill a gap. Think about it: if Microchip had made the best tools from day one, then they would have had the market share and companies like HI-TECH would not have had a market opportunity, and Microchip would not have been in the position where they felt compelled to buy HI-TECH to regain ground and possibly some credibility in the market. I would guess that Microchip’s early strategy included “let the partners/third parties make the tools, we will focus on silicon”, which was probably OK at the time, but the world moved on and suddenly compilers and tools became a strategically important element of Microchip’s go-to-market execution.

OK, Microchip now own the HI-TECH compilers, so why should they not charge for them? HI-TECH did, and customers after all were prepared to pay, so why should Microchip not do the same? Well, I think there is a very good reason: Microchip NEED to make tools to enable the EE community to use their parts in designs, to ensure they get used in products that go to market. As a separate company, HI-TECH were competing with Microchip’s compilers, but now Microchip own the HI-TECH compilers there is no competition. If we agree that Microchip *MUST* make compilers to support their parts, then they cannot really justify selling them in the same way as HI-TECH was able to as an independent company. This is especially true given that Microchip decided to obsolete their own compilers that the HI-TECH ones previously competed with. No doubt they have done this in part at least to reduce the cost of (and perhaps the team size needed for) maintaining two lots of code, and most likely to provide existing customers of the old compilers with a solution to those outstanding “old compiler” issues. So they end up adopting a model of giving away limited free editions and selling the unrestricted versions to those customers that are willing to pay for them. On the face of it that’s a reasonable strategy, but it alienates the very people they need to be passionate about their micro-controller products.

I have no idea what revenues Microchip derives from their compiler tools. I can speculate that their main revenue is from the sale of silicon and that probably makes the tools revenue look insignificant. Add to that the undesirable costs in time and effort of maintaining and administering the licensed versions, dealing with those “my licence does not work” or “I have changed my network card and now the licence is invalid” or “I need to upgrade from this and downgrade from that” support questions and so on. This must be a drain on the company; the energy going into making the compiler tools a commercial subsidiary must be distracting to the core business at the very least.

Microchip surely want as many people designing their parts into products as possible, but the model they have alienates individual developers, and this matters because even on huge projects with big budgets the developers and engineers will have a lot of say in the BOM and preferred parts. Any good engineer is going to use parts that they know (and perhaps even love), and any effective manager is going to go with the hearts and minds of their engineers; that’s how you get the best out of your teams. The idea that big budget projects will not care about spending $1000 for a tool is flawed, they will care more than you think. Microchip charging for their compilers and libraries is just another barrier to entry, and that matters a lot.

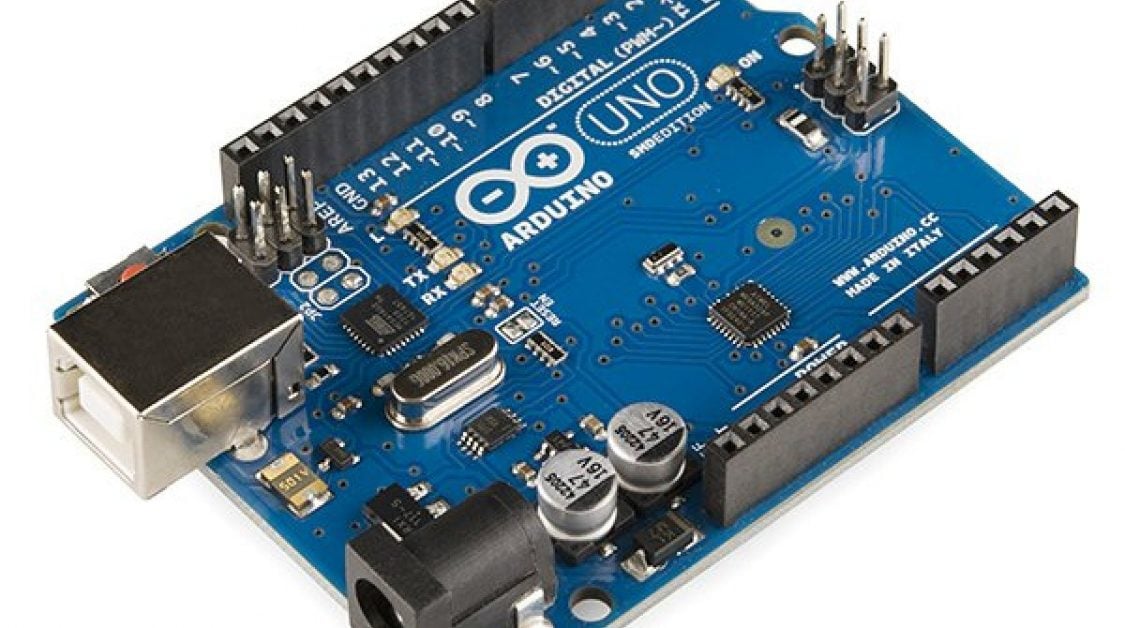

So where is the evidence that open and free tools matter? Well, let’s have a look at Arduino. You cannot help but notice that almost every project that needs a micro-controller these days seems to be solved with an Arduino, and that platform has been built around Atmel parts, not Microchip parts. What happened here? With the Microchip parts you have much more choice and the on-board peripherals are generally broader in scope with more options and capabilities. For the kinds of things that Arduinos get used for, Microchip parts should have been a more obvious choice, but Atmel parts were used instead. Why was that?

The success of the Arduino platform is undeniable. If you put Arduino in your latest development product name it’s pretty much a foregone conclusion that you are going to sell it; just look at the frenzy amongst the component distributors and the Chinese dev board makers who are all getting in on the Arduino act. And why is this? Well, the Arduino platform has made micro-controllers accessible to the masses, and I don’t mean made them easy to buy, I mean made them easy to use for people that would otherwise not be able to set up and use a complex development environment, toolset and language. The Arduino designers also removed the need to have a special programmer/debugger tool. A simple USB port and a boot-loader mean that with just a board, a USB cable and a simple development environment you are up and running, which is really excellent. You are not going to do real-time data processing or high speed control systems with an Arduino because of its hardware abstraction, but for many other things the Arduino is more than good enough. It’s only a matter of time before Arduino code and architectures start making it into commercial products, if they have not already done so. There is no doubt that the success of the Arduino platform has had a positive impact on Atmel’s sales and revenues.

I suspected, and as it turns out I was pretty close to the mark, that because Atmel used an open toolchain based on the GCC compiler and open source libraries, having that toolchain open and accessible probably drove the adoption decision when the team behind the Arduino project started work on their Arduino programming language. That was pure speculation on my part and it was bugging me, so I thought I would try to find out more.

Now this is the part where the product team, executives and the board at Microchip should pay very close attention. I made contact with David Cuartielles, who is Assistant Professor at Malmö University in Sweden, but more relevant here is that he is one of the co-founders of the original Arduino project. I wrote to David and asked him…

“I am curious to know what drove the adoption of the Atmel micro controllers for the Arduino platform? I ask that in the context of knowing PIC micro controllers and wondering with the rich on-board peripherals of the PIC family which would have been useful in the Arduino platform why you chose Atmel devices.”

David was very gracious and responded within a couple of hours. He responded with the following statement:

“The decision was simple, despite the fact that -back in 2005- there was no Atmel chip with USB on board unlike the 18F family from Microchip that had native USB on through hole chips, the Atmel compiler was not only free, but open source, what allowed migrating it to all main operating systems at no expense. Cross-platform cross-compilation was key for us, much more than on board peripherals.”

So on that response, Microchip should pay very close attention. The 18F PDIP series micro-controller with on-board USB was the obvious choice for the Arduino platform, and had the tooling strategy been right the entire Arduino movement today could well have been powered by Microchip parts instead of Atmel parts. Imagine what that would have done for Microchip’s silicon sales!!! The executive team at Microchip need to learn from this: the availability of tools and the enablement of your developer community matters a lot, in fact a lot more than your commercial strategy around your tooling would suggest you believe.

I also found this video of Bob Martin at Atmel stating pretty much the same thing.

So back to Microchip – here is a practical example of what I mean. In a project I am working on using a PIC32 I thought it would be nice to structure some of the code using C++, but I found that in order to use the C++ features of the free XC32 compiler I have to install a “free licence”, which requires me not only to register my personal details on the Microchip web site but also to tie this request to the MAC address of my computer. So I am suspicious: there is only one purpose for developing a mechanism like this and that’s to control access to certain functions for commercial reasons. I read on a thread in the Microchip forums that this is apparently to allow Microchip management to assess the demand for C++ so they can decide to put more resources into development of C++ features if the demand is there. That is a stock corporate response, and I for one don’t buy it. I would say it’s more likely that Microchip want to assess a commercial opportunity and collect contact information for the same reasons, perhaps even more likely for marketing to feed demand for a sales pipeline. What’s worse is that after following all the instructions I was still getting compiler errors stating that my C++ licence had not been activated. Posting a request for help on the Microchip forum has now resolved this but it was painful, it should have just worked – way to go Microchip. Now, because of the optimisation issues, I am sitting here wondering if I should take the initiative and start looking at a Cortex M3 or an Atmel AVR32. I wonder how many thoughts like this are invoked in Microchip customers/developers because of stupid tooling issues like this?

You do not need market research to know that for 32-bit micro-controllers C++ is a must-have option in the competitive landscape. Not having it leaves Microchip behind the curve again. This is not an extra PRO feature, it should be there from the off – what are you guys thinking! This position is made even worse by the fact that the XC32 toolchain is built broadly around GCC, which is already a very good C++ compiler, so why restrict access to this capability in your build tool? If Microchip wanted to know how many people want to make use of the C++ compiler, all they need to do is ask the community, or perform a simple call-home on installation, or even just apply common sense. All routes will lead to the same answer, and none of them involve collecting people’s personal information for marketing purposes. The whole approach is wrong-headed.

This also raises another question. If Microchip are building their compilers around GCC, which is an open source GPL project, how are Microchip justifying charging a licence fee for un-crippled versions of their compiler? The terms of the GPL require that Microchip make the source code available for the compilers and any derived works, so any crippling that is being added could simply be removed by downloading the source code and removing the crippling behaviour. It is clear however that Microchip make all the headers proprietary and non-sharable, effectively closing out any competitor open source projects. That’s a very carefully crafted closed source strategy that takes full advantage of open source initiatives such as GCC. It is not technically in breach of the GPL licence terms, but it’s a one-sided grab from the open source community and it’s not playing nice – bad Microchip…..

Late in the game, Microchip are trying to work their way into the Arduino customer base by supporting the chipKIT initiative. It is rumoured that the chipKIT initiative was actually started by Microchip to fight their way back into the Arduino space to take advantage of the buzz and demand for Arduino based tools – no evidence to back it up but seems likely. Microchip and Digilent have brought out a 32bit PIC32 based solution, two boards called UNO32 and Max32, both positioned as “a 32bit solution for the Arduino community” by Microchip, these are meant to be software and hardware compatible although there are the inevitable incompatibilities for both shields and software – oddly they are priced to be slightly cheaper than their Arduino counterparts – funny that 🙂

Here is an interview with Ian Lesnet at dangerousprototypes.com and the team at Microchip talking about the introduction of the Microchip based Arduino compatible solution.

There is also a great follow-up article with lots of community comment all basically saying the same thing.

http://dangerousprototypes.com/2011/08/30/editorial-our-friend-microchip-and-open-source/

Microchip have a real uphill battle in this space, with the ARM Cortex M3 based Arduino Due bringing an *official* 32-bit solution to the Arduino community. Despite having an alleged “open source” compiler for the chipKIT called the “chipKIT compiler”, it’s still riddled with closed-source bits. And despite the UNO32 and MAX32 heavily advertising features like Ethernet and USB (for which Microchip are known to have great hardware and software implementations), these are only available on the UNO32 and MAX32 platforms if you revert back to Microchip’s proprietary tools and libraries. So the advertised benefits and the actual benefits to the Arduino community are different, and that’s smelly too….

OK, so I am nearing the end of my rant and I am clearly complaining about the crippling of Microchip provided compilers, but I genuinely believe that Microchip could and should do better and for some reason, perhaps there is a little brand loyalty, I actually care, I like the company and the parts and the tools they make.

One of my own pet hates in business is to listen to someone rant on about how bad something is without having any suggestions for improvement, so for what it’s worth, if I were in charge of product strategy at Microchip I would want to do the following:

- I would hand the compilers over to the team or the person in charge that built the MPLAB-X IDE and would have *ALL* compilers pre-configured and installed with the IDE right out of the box. This would remove the need for users to set up individual compilers and settings. Note to Microchip: IDE stands for Integrated Development ENVIRONMENT, and any development ENVIRONMENT is incomplete in the absence of a compiler.

- I would remove all crippling features from all compilers so that all developers have access to build great software for projects based on Microchip parts

- I would charge for Priority Support, probably at comparable rates that I currently charge for the PRO editions of the compilers – this way those companies with big budgets can pay for the support they need and get additional value from Microchip tools, while Microchip can derive its desired PRO level revenue stream without crippling its developer community.

- I would provide all source code to all libraries, this is absolutely a must for environments developing critical applications for Medical, Defence and other systems that require full audit and code review capability. By not doing so you are restricting potential market adoption.

- I would stop considering the compilers as a product revenue stream, I would move the development of them to a “cost of sale” line on the P&L, set a budget that would keep you ahead of the curve and put them under the broader marketing or sales support banner – they are there to help sell silicon – good tools will create competitive advantage – I would have the tools developers move completely away from focusing on commercial issues and get them 100% focused on making the tools better than anything else out there.

- I would use my newfound open strategy for tooling to both contribute to, and fight for my share of, the now huge Arduino market. The chipKIT initiative is a start but it’s very hard to make progress unless the tool and library strategy is addressed.

Of course this is all my opinion with speculation and assumptions thrown in, but there is some real evidence too. I felt strongly enough about it to put this blog post together. I really like Microchip parts and I can even live with the stupid strategy they seem to be pursuing with their tools, but I can’t help feeling I would like to see Microchip doing better and taking charge in a market that they seem to be losing grip on. There was a time a few years ago when programming a micro-controller and PIC were synonymous. Not any more; it would seem that Arduino now has that. All of that said, I am not saying that I do not want to see the Arduino team and product continue to prosper. I do. The founders and supporters of this initiative, as well as Atmel, have all done an amazing job of demystifying embedded electronics and fuelling the maker revolution, a superb demonstration of how a great strategy can change the world.

If I have any facts (and I said facts, not opinion) wrong I would welcome being corrected, but please, as a reminder, I will ignore any comments along the lines of Atmel is better than PIC is better than MSP430 etc… that was not the goal of this article.

Please leave your comments on the article

This content is published under the Attribution-Noncommercial-Share Alike 3.0 Unported license.

HI Gerry,

Having just started to install the Microchip IDE last week and looking into these issues myself, I cannot agree more with your prognosis.

Very well said, I hope someone at Microchip reads and takes note of your comments.

Keep up the great work,

Many thanks

Dave.

Thanks Dave, bit long that article but I think it’s worth saying. Good luck with getting up and running. For what it’s worth, if you are starting out from scratch you only want the XC compilers, they are the best. Gerry

I think you have hit the mark dead on. If your main income is from selling silicon (and I DO like the silicon), why, for what (I think) can only be a tiny extra boost in the bottom line, would you restrict the performance of the silicon by making anything but the best compilers your dev team can create and releasing them for free? I and a lot of people like me may play with Microchip products and only use the ‘free’ versions of the tool-chains (it’s that cost thing!), but if in a day job I was asked to suggest a chip for a project I would have to qualify my choice with the note that it will cost xx more for the toolset for each developer due to licensing issues. That of course would be a missed opportunity to get a Microchip in the door. And of course I might develop a product idea but conclude, on the basis of testing with the free compiler, that the tested micro was not up to the task when in fact it might be more than up to the job.

Span,

Yes indeed, for the life of me I cannot see how Microchip’s strategy makes sense. The only explanation for deriving revenues from the compilers is to justify purchasing HI-TECH, but that’s flawed. As I said in the post, HI-TECH as an independent had the right to charge, but only because they were providing something that was much better than the crap that Microchip were putting out. Now that Microchip own HI-TECH it does not make sense to charge, because they are no longer putting out crap to compete with. Seems stupid to me..

Gerry

I would love to see a senior microchip rep respond to the points you make…

Span, yeah, not likely though…:)

Gerry

Gerry, I really like how passionate you are about PIC. They can do amazing things in tiny, cheap packages, so I understand why their users are so keen on them. I looked at PIC, but their support for non-Windows systems wasn’t there at the time. Also, PIC C code tends to dive into low level very quickly, so you have to develop a really deep understanding of a particular chip to understand it.

Having gcc for PIC would have definitely swayed the Wiring/Arduino team’s choice, and it’s a shame that Microchip saw its development as a threat, rather than an opportunity. If the official Microchip compilers really are modified versions of gcc, then hobbling the code from them is contrary to the spirit of the GPL.

Since almost every other µC manufacturer has embraced open tools, it may be a little late for Microchip to join the movement for PIC. Me, I’m rather glad that I can develop the same code and run it on ATtiny, MSP430, ATMega and ARM. Sure, it may seem wasteful of hardware for EE types, but I get my prototyping done without learning the specific line-noise for each processor. My brain’s already got too many Z80 opcodes and timings lurking uselessly from my teenage years!

Hi Stewart,

Thanks for the comments. Yeah, I do like the parts, they are really great. I also admire the way they have not *yet* jumped on the ARM bandwagon; their 32-bit micros are pretty good contenders in the 32-bit space, but they are just their own worst enemy. They have some of the best software stacks for Ethernet, USB and so on, and I imagine they are fearful that going open-source would erode their value proposition if their libs were ported to other platforms. Of course they could always close source those libs and restrict free use to their platform only, with paid use on other platforms. There would be all sorts of ways to make it work without strangling your community of developers – it’s just odd….

Gerry

Hi Gerry

I could not agree with you more. I am jumping for joy that you wrote this article.

I am blessed to be able to develop with PIC every day, along with the other major chip in my stable, the Cypress PSoC.

I have had the same argument with Cypress over the past few years; in fact I managed to speak to the very top guy in Cypress responsible for Developer Tools, Alan Hawse. I argued the point that paying $1000 for a compiler license just made me choose other manufacturers. They have since made their compilers free and based on GCC, whereas previously they were licensed from Keil and the end user had to pay for a full license. However their USB chips still use a proprietary compiler with the free version being crippled at less than 4KB (yes, 4000 bytes) of compiled code – you can’t even run the basic ‘Hello World’ demo that comes with the dev kit!

Getting back to Microchip, I would like to add my support to your campaign. I would join forces with you to help encourage Microchip and others to offer great quality, free development tools, as it can only drive sales upwards. Although my experience with Microchip is that the accountants run the business, not the managers and staff who know the industry and its players.

Hi Kenny,

Thanks for the comments, glad you liked the article. I suspect we are flogging a dead horse here. As you say, Microchip must surely know what they are doing; it’s obviously their strategy and that’s fair enough, they have the right to have their own strategy – but it sucks IMHO.

Gerry

It helps your case (especially with a rant) to get this term correct… (heh, heh)

http://grammarist.com/spelling/silicon-silicone/

Hi Chuck,

In fact I managed to spell it both ways in that article but have corrected it now, thanks for pointing it out. I am a bit lazy when it comes to spelling I have to say, I think I type faster than my brain can process what I am saying. I would hope that the article in general terms would stand up above an incorrect spelling of a word 🙂

Gerry

You’re absolutely right Gerry…the article does stand on its own… and I couldn’t agree with you more. In my experience with companies which make these kinds of marketing mistakes, it means there’s somebody in a position of power who can’t accept or acknowledge they’ve made an error…so they hang on to their mistake hoping that time will fix the issue. It only gets fixed when enough customers make it clear there’s a problem.

I apologize for not saying so in my first post. I’m just particularly sensitive about that particular mis-spelling. Often, but not in your case, folks make the mistake both in spelling and spoken (since they sound different), and then it really bugs me…

Hi Chuck, no problem at all, and no apology necessary my friend. I am one of those folk who is completely de-sensitised to misspellings; so long as I understand the message I generally don’t even notice spelling – not even my own obviously 🙂 Gerry

One other spelling error – in the middle of the last paragraph, you wrote “loosing” instead of “losing.”

Thanks, corrected 🙂

Hi Gerry

Good to see you back

I initially started with Atmel AVR and used it for several years at hobbyist level. Then, because of shortages, some price fluctuations and mostly inferior peripherals, I tried to migrate to PIC; that was around the time that the MPLAB-X IDE had just come out. I installed the thing but couldn’t configure the damn thing to work properly. At the same time I tried Cortex M3 parts and everything worked flawlessly, and open source libraries were a bonus too, so I stuck with the Cortex M3 and I don’t think I will be going back. Even if I need a really low price micro, the good old AVR would just do the trick 🙂

Hi Ali,

Thanks for the comments. It does not surprise me that the tools are off-putting. MPLAB-X is actually really well implemented, but with the compilers being separate you have to jump through some hoops to get it working, and at the time I started using it Microchip were not exactly clear about which compiler to choose. I think time has corrected that particular concern, with the XC compilers being the ones to use for new projects. I would like to play around with Cortex-M3 / ARM microcontrollers at some point, and I am curious to know what development environment you use. From what I can tell, for ARM development the only industry strength development environments are commercial options, Keil for example..

Gerry

I am currently using Keil, but I believe there is a very good GCC compiler with the Eclipse IDE for ARM development. I wanted to give it a try but, mostly because of laziness, I haven’t yet.

I tried ST, Atmel and LPC parts, and I personally prefer ST parts. There is this awesome standard peripheral library driver for ST and LPC parts which speeds up the software development considerably.

Hi Ali,

Thanks for the info, I will keep it in mind.

Gerry

I completely agree with you. I too like Microchip MCUs and am particularly drawn to them, not by price or internal architecture, but because of the third party support, compilers, etc.

Back in the 90’s, in the early days of FPGAs, I designed a synchronous demodulator in a Xilinx 3000 series part with free, yet buggy tools. When new tools were released, Xilinx tried to charge me to get the new tools. I called a meeting and told them to go pound sand…I was not going to give them a purchase order for something that should be free. After all, the tool was specific to their silicon so what good was it? It only helped them sell more parts. After the meeting, I instructed them to leave the building and NEVER come back. The next day I received an entire set of pro SW tools delivered to my office. Lesson: Don’t let any company hold your design hostage….and that includes Microchip.

Bob,

That’s a great story and right you were. I think the problem with silicon makers is they see third parties selling tools for their stuff and then think they should be able to do the same, but as the maker of the silicon they cannot – not if they want to sell their parts that is.

Gerry

Dave Jones……certainly you’ve seen this. Clearly no love lost for Microchip. This is a classic. http://youtu.be/LjfIS65mwn8

Hi Bob,

Yes I have seen Dave’s video, good rant at Microchip…

Gerry

I wanted to start with micro controllers a few years before Arduino became popular.

As a hobbyist I was presented with the choice of PIC vs AVR. At that time it seemed that PIC was the best choice. Most nice projects on the internet used them. There was a large community of users. Unfortunately when I got into the details it became clear that PIC is a mess for a beginner trying to learn from the web. Several families of chips with different cores, very poor C support at that time, different compilers, contradicting opinions on the forums. It was very hard to choose anything.

AVR on the other hand was simple and very friendly. Single core on all chips, basically designed for C, AVR Studio was free and integration with GCC was a breeze. You can argue that single solution for everything was limiting, but when I looked over some comparisons of the hardware AVR didn’t lack too much in capabilities where it was important. I did my research over the course of several months and it became clear that AVR is quickly gaining popularity due to that openness and simplicity.

What I want to point out is that even a few years before Arduino there was a move away from PIC towards a friendlier and more open environment. Arduino is a result of an entire generation of students and hobbyists looking for an easier way into MCUs. My friends did exactly the same thing. Microchip was sleeping for a very long time and now they have to pay for it.

I’m afraid that it might be too late for PIC to regain its losses. There are great alternatives right now. PIC is just one of many.

Asked Microchip on twitter, didn’t get much other than marketing fluff:

https://twitter.com/neslekkim/status/427727536752033793

No surprise for the generic responses from Microchip, I imagine they get this question a lot. Nice one for trying though…

Gerry

I completely agree with you Gerry.

I think Microchip should get their act together on their toolchain. They provide very interesting parts (peripheral set, 16-bit parts, USB, Ethernet, CAN, etc.), they promote their parts via sampling (yes, I do think it’s OK for students or hobbyists to use the service, only if they order a PCB for their project at the same time of sampling..), their hardware tools have the right price, etc.

But in my opinion, their toolchains are buggy and unsupported. I hear you have not got many issues any more with MPLAB X, so this may be concentrated on the PICKIT’s: but I have found it hopeless for debugging. I have recently bought a PICKIT3 and only been able to use it with the PICKIT 3 standalone program (which adds no functionality to the PICKIT 2 standalone program). MPLAB X either loses connection, “needs a memory object” or can’t figure out which line of C code it is debugging.

Having developed more in-depth experience with ARM + IAR + J-LINK (on internships), I must say the MPLAB X debugging experience is a heap of garbage; debugging was much quicker and less buggy on ARM.

The crippling of the compilers is a joke. Who in their right minds sells parts of different FLASH sizes, but at the other side of the sale also tries to get more profit by selling compilers with “40% code optimization” thus potentially making parts with double FLASH size redundant. Where the only thing they do is cripple the GCC “clean up” routines.

I think you hit the nail right on the head with your blog post, they should remove this idiocy if they want to be taken seriously on the market.

If people ask me about Microchip parts (you know the fan boys), and I tell them there are free C compilers around. “Oh really? Are there any limitations?”, well euhrm, you can compile C but it can generate large, weird & retarded assembler output, unless you break the bank. “Oh Hmpf, I will stick to some ATTINY’s then”. Not impressed.

Hans,

Thanks for your comments, and yes you are right about the buggy tools. MPLAB-X does provide a pretty stable environment but the hardware debugging is not only hopeless but I find in micro-controllers pointless too, at least in my experience. I prefer to use other more reliable debugging strategies in developing micro-controller code. I use an ICD3 and I never use it for debugging because every time I try it never works. I hate the way the programmer sometimes fails to program a chip and then you have to disconnect and re-connect it to reset the USB driver, and on my iMac if I have an SD card in the card slot the ICD loses the plot and starts to report system errors saying the disk drive has been removed (so the ICD3 seems to pretend it’s a disk drive or flash drive or something). So you are right, the tools are not perfect but once you know the problems and the work-arounds they are pretty stable – or at least the development workflow can be. Gerry

Somehow I am glad to hear you also have problems with debugging with MCP tools 😉 At least I know I am not the only one. But I guess we also are working on different platforms (Win 7 vs Mac)

Anyway, the “quirks” you describe can be manageable, but initially are very frustrating, time consuming and confusing. I think at those times they may lose people when they don’t want to fight (yet another) debugger or IDE.

I find the quickest development cycle on PIC is to develop with a serial port and just program new images, because launching a debug session with a PICKIT is rather slow. This works pretty well on small projects, and reasonably well on medium sized projects.

However in my case: I recently wanted to debug a PIC24FJ64GB004 chip that was running a small webserver and wanted to debug SPI problems for an RF transceiver (MRF49XA/RFM12 ones).

Both parts (ethernet/RF) use the same SPI peripheral; so the webserver may be using the SPI bus while the RF ISR needs to write/read some registers ASAP. Maybe not very practical if I knew the RF transceiver needed this strict ISR SPI access, but oh well.
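One common way to keep two users of a single SPI peripheral from colliding can be sketched in plain C as below. This is only a host-compilable illustration, assuming the RF work can tolerate being deferred until the current Ethernet transfer finishes; every name in it (`spi_try_lock`, `spi_unlock`, `rf_isr`, `rf_service`) is hypothetical and not part of any Microchip stack or library.

```c
#include <stdbool.h>

/* Hypothetical names throughout; not part of any Microchip library. */
static volatile bool spi_busy = false;     /* set while mainline code owns the bus */
static volatile bool rf_pending = false;   /* RF work postponed by the ISR */
static volatile unsigned rf_serviced = 0;  /* counts completed RF services */

/* Stand-in for the real register read/write burst to the transceiver. */
static void rf_service(void)
{
    rf_serviced++;
}

/* Mainline code (e.g. the Ethernet driver) claims the bus before a transfer.
 * On real hardware the check and the store should sit inside an
 * interrupt-disabled section so the ISR cannot run between them. */
bool spi_try_lock(void)
{
    if (spi_busy) {
        return false;
    }
    spi_busy = true;
    return true;
}

/* Release the bus, then run any RF work the ISR had to postpone.
 * On real hardware this also needs interrupt protection. */
void spi_unlock(void)
{
    spi_busy = false;
    if (rf_pending) {
        rf_pending = false;
        rf_service();
    }
}

/* Body of the RF interrupt handler. */
void rf_isr(void)
{
    if (spi_busy) {
        rf_pending = true;   /* bus in use: defer until spi_unlock() */
    } else {
        rf_service();        /* bus free: service immediately */
    }
}

/* Accessor so the behaviour can be observed from outside this file. */
unsigned rf_serviced_count(void)
{
    return rf_serviced;
}
```

The trade-off is latency: the RF transceiver waits for at most one mainline transfer, so this only fits if the mainline transfers are kept short.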

Once things get out of hand and start randomly resetting, it would be very useful to know at some part where to look: is it my ethernet stack? RF stack? Some part of the ISR routine (can’t really do much printfs there) RTOS? What kind of fault?
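For the “what kind of fault?” question, a cheap first step that does not need a working debugger is to decode the reset-cause register at startup and print the result over the serial port. The sketch below is a host-compilable illustration only: the bit positions are assumed from the PIC24F `RCON` register layout and should be checked against the datasheet for the exact part, and `reset_cause` is a hypothetical helper name.

```c
#include <stdint.h>

/* Bit positions assumed from the PIC24F RCON register layout;
 * verify them against the datasheet for the exact part. */
#define RCON_POR    (1u << 0)   /* power-on reset */
#define RCON_BOR    (1u << 1)   /* brown-out reset */
#define RCON_WDTO   (1u << 4)   /* watchdog timer time-out */
#define RCON_SWR    (1u << 6)   /* software RESET instruction */
#define RCON_EXTR   (1u << 7)   /* external (MCLR) reset */
#define RCON_IOPUWR (1u << 14)  /* illegal opcode / uninitialised W access */
#define RCON_TRAPR  (1u << 15)  /* trap conflict reset */

/* Hypothetical helper: map a captured RCON value to a readable cause.
 * Fault-type causes are checked first; POR is checked before BOR because
 * a power-on typically sets both flags. */
const char *reset_cause(uint16_t rcon)
{
    if (rcon & RCON_TRAPR)  return "trap conflict";
    if (rcon & RCON_IOPUWR) return "illegal opcode or uninitialised W register";
    if (rcon & RCON_WDTO)   return "watchdog timeout";
    if (rcon & RCON_SWR)    return "software reset";
    if (rcon & RCON_EXTR)   return "external MCLR reset";
    if (rcon & RCON_POR)    return "power-on reset";
    if (rcon & RCON_BOR)    return "brown-out reset";
    return "unknown";
}
```

On the target, the idea would be to read the register once at the top of `main`, log the string, and then clear the flags so the next reset reports fresh information.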

It is very frustrating that (after 2 crashes while updating some PICKIT firmware…), a debug session slowly launched, and then you get the error: “can’t set breakpoint – can’t translate/look-up C line to assembler output”. All optimizations were turned off and it just couldn’t figure out what’s where any more on a medium sized project (I guess only 50% usage on a 64K part is medium sized, isn’t it?).

I had to manually go through the linker map, break the assembler address line, and debug the assembler. Not very pretty.

That is not what I expect. I have been able to use IAR for a while (and still do). My first experience was with an Olimex J-LINK clone debugger. It came with this “jlink replacement DLL” which I had to put into IAR. It works.. the debugger launched quickly, it programmed chips, could halt practically anywhere, could read practically every register & piece of memory, etc. Wonderful.

Until you close the debug session, you need to replug the Olimex tool USB cable, otherwise it could not launch a new debug session.

Once I knew that, not so bad. I got accustomed to it. USB connectors quickly wear out so replugging becomes effortless….. 😉

Later on we did order some “real” J-LINK’s, because we needed more debuggers and couldn’t really say Olimex was worth the money. Those tools worked flawlessly, no complaints at all.

And I recently saw you can get those J-LINK tools in an educational version (for us students/hobbyists) too, for less than the price of any ICD.

Hi Hans,

Thank you for posting the information.

Gerry

I doubt Microchip will change their tune (except maybe adding a few optimizations to the free 8-bit compiler, which needs it the most) unless the beancounters can be convinced part sales will increase enough to cover the loss of income from compiler license sales. I don’t think they’ll ever feel enough threatened by the competition to give the tools away purely out of desperation.

Although it sucks to have the limitations there, in all honesty the optimizations provided in the 16- and 32-bit compilers are enough for the vast majority of users, especially hobbyists. The biggest loss are the size optimizations, performance improvements quickly taper off. The flipside is that debugging and code inspection becomes a lot harder, as GCC can transform the code beyond recognition when pulling out all the stops.

Lastly, I have to say I’m happy the 8-bit PICs weren’t chosen for Arduino. The architecture is such a poor fit for C, and the compiler must impose such limitations and nonstandard behaviour just to work that it would never have gained such wide-spread acceptance. Microchip have lately adopted the chipKIT and Fubarino boards as their own Arduino-likes (based on the PIC32), but yeaaaaah…

I agree, I doubt Microchip will change their strategy, and at the end of the day they have the right to adopt whatever strategy they like, as customers we can always choose to use other parts.

The optimisation point I disagree with though. Just because I am a free compiler user does not mean I want, or can live with, no-ops or other CPU-cycle-consuming stuff inserted into the generated code. It’s nice to be able to optimise for performance in ISR’s and other tight processing, and it seems unjust to cripple the compiler in this way. I do agree that for hacking around you can simply buy a bigger, faster chip, but it’s more the principle. IMHO Microchip are short-sighted here.

PIC’s architecture is less C friendly but in their defence Microchip have demonstrated that with the right compiler approach this is mostly transparent, although you do need to be mindful of ROM/RAM as they are addressed differently. That does not make it impossible or even bad, but you are right that it’s not ideal.

Gerry

Amen brother.. I too love the silicon and hate the support/development environment. I recently upgraded to MPLAB X IDE to work on a new dsPIC project and haven’t been able to see variables in the debugging environment ever since. 🙁 .

I prefer writing in assembler so am less concerned about free C compilers, while from where I sit it looks like support for the C coders is better than us lowly bit pushers get.

We (developers of embedded products) should be the customers that Microchip wants to keep happy… It keeps getting harder and harder to be happy using them

John Roberts

PS I really really like the silicon.. The DSP with 256x up-sampled 16 B dac built-in is really sweet. Developing code blind to variables is not so sweet. Hopefully they’ll finish connecting all the dots in MPLAB X IDE variable visibility some day soon.

Hi John,

Thanks for the comments. I have never played with the dsPIC’s, have not had a project that needed one 🙁 I used to program in assembler, in fact that’s how I started programming, Z80 and 6502’s and then 80x86 for some years too. I used to mesmerise my buddies with my few-hundred-byte programs that did all sorts of apparently clever stuff, all written in assembler (MASM 5/6), but that all stopped when I mastered C. I could not imagine writing anything other than a critical ISR in assembler now, and even then I would probably write in C, de-compile and hand optimise, just for speed of development.

Gerry

A great example for Microchip to follow would be Silicon Labs (whose devices I use all the time). I hopped on their bandwagon when the microcontroller business was still Cygnal. You couldn’t beat a development kit for $99, and the $39 programmer/debugger dongles. Those of us who used the old 80C51 (or the 87C51) and the delicate flower called the $5000 NOHAU emulator literally JUMPED FOR JOY when the first Cygnal dev kits showed up on our desks.

Anyways, let’s see, SiLabs has always shipped the dev kits with a 4k-byte version of the Keil tools and they’ve also always supported SDCC and IAR. And now they’ve gone a step further, giving away a version of the Keil dedicated to their 8051s, and they’re working on a new Eclipse-based IDE which will use Keil or SDCC or whatever other compiler you like.

And when they first announced their Cortex-M3 parts, they made clear that the software tools would be free and not crippled.

That’s how you get new customers and keep your existing customers happy.

Andy,

Agreed, that’s a much more sensible strategy to adopt. I guess Microchip might be a little complacent, they continue to grow and are ranked number 1 or 2 with their 8-bit micro so they probably (and possibly justly) think that their strategy is sound – which actually is hard to argue with given their financials. It just feels like they could do better by their customers; as I described, there are many ways to generate tools revenue through support and other value-add services.

Gerry

Hi,

Possibly I’m not reading correctly, but I understand your 4th paragraph to state that XC8, XC16, and XC32 are based on the HI-TECH compilers. It’s my understanding (please tell me if I’m wrong) that only XC8 is based on the HI-TECH compiler. Both XC16 and XC32 are gcc-based.

Thanks,

Dave

Hi Dave,

That’s a very good question. I have *assumed* that the XC series of compilers are based on the HI-TECH compilers simply because they seem to be unified, use the same header/config bits style and approach. It is possible that the HI-TECH 32 bit compiler was based on the gcc compiler but I don’t know that. It’s pretty clear that the XC series of compilers came after Microchip bought HI-TECH, which is what has led me to that conclusion, but that’s only based on my own understanding and assumptions, not fact. Perhaps someone knows for sure and can comment?

Gerry

XC8 is a merging of HI-TECH’s PICC and PICC-18 products. The HI-TECH compilers for Microchip’s 16-bit and 32-bit MCUs, just like the HI-TECH compilers for all other architectures, had no connection to GCC and were retired after the acquisition.

XC16 and XC32 are GCC-based with no HI-TECH connection.

Hi Mark,

The XC8 compiler and libraries are definitely an improvement over the old PICC-18 compiler I used to use with the old MPLAB IDE. It’s interesting that you guys acquired HI-TECH just for the PICC 18 technology. Thank you for the clarification, that’s interesting to know…

Gerry

Hello

You have summed up my thoughts of the last 2 weeks excellently. I’m also thinking about using AVR or Cortex… due to the tools. Microchip should open their compilers, it’s a must.

If they earn money selling hardware it makes no sense to cripple the compilers (apart from the support), when the expected revenue from selling compilers should be 100 times lower.

I have designed and built compilers… I have checked the code generated by XC8 in free/std/pro modes. The code generated in free mode is suspicious; it looks as if absurd instructions, jumps, etc. are introduced on purpose and only make the code grow without reason. I am not talking about missing optimizations in free mode, there are just really crappy and nonsensical instructions generated when compiling (i.e. moving data to W, then to a GPR, then back to W again… for a simple C statement).

I’m not the only one thinking about migrating to other MCUs due to the tools Microchip is providing… and the main reason is free, non-crippled compilers. MCUs need software, and software needs some environment for development, usually IDE+compiler. Therefore if you want to sell MCUs you have to supply a good IDE+compiler to ease the development process, otherwise even with better hardware resources the customer will probably migrate to not-so-good hardware with much better software tools that shorten the development process and make the ROI increase faster.

For now I’ll stay with Microchip devices, but just because I know them very well (and like them), but I’m starting to look for alternatives with better software tools.

I hope Microchip doesn’t lose this opportunity…

Couldn’t agree more.

I do like Microchip microcontrollers. A lot. They have an incredibly broad range of parts with truly killer features, IMHO way ahead of competition if you exclude the relatively recent Cortex-M0 and Cortex-M3 parts from other vendors. But the availability of GCC for AVR and for Cortex tips the balance for me. It’s really that simple.

I recently went half way through the development of a new system. I had decided to use the PIC32MX220 part because it had some very specific features I needed, but the more I progressed through the design of the electronics the more uncomfortable I was feeling about starting off again with the Microchip crippled IDE/Compiler combo. At some point I found a workaround to implement this particular feature I needed with an STM32 part, for which I had already been using an Eclipse+GCC+OpenOCD setup, and decided right away to go through the trouble of replacing the microcontroller.

As sad as it sounds (because again, their microcontrollers are awesome), I’m not looking back to Microchip until there’s a free full fledged compiler. The competition does have it, and Microchip gets its revenue from selling parts. What are they waiting for?

Hi Nacho,

Thanks for the comments, your story would appear to be more common than I had originally thought. Microchip are not reacting and that’s plain dumb on their part – they are hearing that they are losing a battle and they are sitting there letting it happen – they clearly think their strategy is OK. I have not yet made the transition but I do have an array of other non PIC micro controller eval boards to play with.

Gerry

As a historical note, back in 2008 some people from Microchip did some work on a PIC backend for the LLVM compiler. I recall that support was very sketchy still back then and looking at the source repository now it seems they abandoned this effort again in 2010:

https://github.com/llvm-mirror/llvm/commits/master/lib/Target/PIC16

https://groups.google.com/d/msg/llvm-dev/w4gbGM8co_w/Pcoao0hZ4JoJ

Nice Rant Gerry

Echoes my opinions quite well. Microchip should take note and maybe sack a few MBA’s to pay for it, the return on investment will be worth it in the long run 🙂

Boz

Hi Boz, thanks for the feedback. Perhaps one of the MBA’s ought to point them in the right direction 🙂 Gerry

The story of my life! I started with my all time favorite PIC16F628A many years back. Way before the Arduino craze began. I spent many sleepless nights digging through the datasheets and studying the micro-controller architecture. But deep down, the fact that Microchip’s tools were so crippled and the “alien” architecture irked me to no end. After two years, I realized that I was hopelessly headed towards a dead-end. It was a bitter disappointment to discover that the path I was on didn’t lead to anywhere close to where I wanted to be in the future. What good is it to be a master of a skill if there is no room to grow? I wanted to invest my precious time in acquiring skills that I could carry over to different areas that I was interested in: Robotics, Machine Learning, Embedded Linux, Parallel Computing, Machine Vision etc. So with great pain, I switched to AVR micro-controllers and started all over again (with Eclipse, GCC based Standard C99, GDB). Six years down the line, I now think that was the best decision that I had ever made. And here is why. Recently, I started playing with ARM Cortex M0, and every minute I had spent on learning and mastering the FOSS tools paid off many times over. I was able to port my code base with minimal and sometimes no effort at all. Never will I allow any company to jail me in their walled gardens. Ever. My 2 cents.

Thanks for the comment, I hear you. Gerry

Hi Gerry, Firstly the Resistance box: excellent, used daily, and I have bought some more jumpers for spares. Now PIC chips: I would love to get into this as there are many radio amateur projects that use PIC chips, and there is my problem, I’ve never had anything to do with them and wouldn’t know where to start. I’m sure there must be a programmer module for these devices, and a tutorial on getting started !!!

Hi Paul,

Thanks for the feedback on the resistor, glad you find it useful. Yeah more people should use micro controllers, they are as ubiquitous (and as cheap) today as the 555 was 20 years ago but there is a big ramp-up time to deal with tools, environment, workflow, language and general experience. I am really wanting to do a video series on using micro-controllers to help people who have electronics experience but not micro-controller experience. I have to spend some time devising the content and right now my day job is consuming much of my time.

Gerry

It’s an old saw, or adage in silicon valley, hardware doesn’t sell itself. I recently started in uC programming/learning and I chose Atmel because I read about these kinds of issues. There are too many choices for people like me and so when I see licensing or other corporate nonsense that choice gets a big red flag and usually falls off my list of choices. It was not that easy getting started with the choice I made but at least I didn’t have to deal with licenses or cripple-ware. Don’t have the time or patience, that’s so 1990s. Heads will have to roll at Microchip but really it seems late, maybe too late? Limiting your market space is almost always a bad idea. It’s hubris.

Well Gerry, since my last post I have a PIC chip programmer, and I had a go at making a hex file, and it worked. Yes, it’s only a flashing LED, however they are my first steps. There are some interesting amateur radio circuits that use PIC chips, so this has opened a whole new world for me, and a very exciting world too.

Paul

Hi Paul,

That’s really great. If you have been putting it off for years or have thought you don’t need micro-controllers you have just taken the first step on a journey that will get you wondering how you ever got by without using them 🙂 Good luck, you should have fun

Gerry

If I can only find a program now that you write into, and it turns it into a hex file automatically in one go, that would be fantastic.

Is that not what the IDE does?

I have no idea, you know that situation when you are in a foreign country, and have the map, but are still lost, well that’s me 😉

Paul,

I do know that feeling, I had that for a couple of years when I first started to learn to program. It takes a while, but trust me, persist, the light will come on…

Gerry

Hey Gerry,

Your title suckered me in. I find it absolutely amazing that the PIC could have been the Arduino. I cut my teeth on microcontrollers with the PIC. Mainly because they gave me free samples and an assembler to play with. I have never been able to use a C compiler for the PIC as it was always a bit too expensive. Most of my career has been industrial controllers, but there is just something magical about micros. I have Predko’s books on the PIC and have read those cover to cover. So that is a bit of my history. What about today?

Well today I generally use Arduinos and Arduino “like” boards. I have one IDE to learn for the most part. I use Arduino official boards, some Arduino knockoffs, an ARM board called the Teensy 3.1, and the Uno32. So I am using PICs, but only because it has an Arduino type IDE. It is simple and works. The main reason I am using the Uno32 is because I needed microsecond timing for a UT system. The Uno32 fit the bill and the price was great. Now I use two Uno32s to control an $8000 UT Mux inside a 2 million dollar robot. However, down the road those Teensy 3.1 ARM boards running at 96MHz just might replace that. Time will tell. The Uno32s “just work” for that application. I don’t remember the last time I even wanted to install a “professional” tool chain for PIC. If it does not work with the MPIDE I think I will pass.

Like you I am saddened by the state of PIC tools. For years I scratched my head and wondered “why”. They are great chips, but are artificially crippled in the market place as new developers reach for tools they used to make a robot when they were 10. Seriously, every kid wants to make cool stuff like that. When they go to engineering or tech school they are not going to pick a PIC. They will use Atmel or ARM. For me I am more of an integrator than a board designer and I choose dev boards I can field for specific purposes. I just bought a Yun for a sensor test platform at work, and one for an exercise machine interface to a computer at home. Now Arduino is getting into combining micros with embedded Wifi and Linux. I use embedded PC104 boards with Linux too. So this is so right at home for me. Microchip better get in on this or it will not be the chip of choice for micros like it used to be. I honestly don’t know what will happen to Microchip if it does not see this trend. I started out with PICs and now I professionally use them very little because of the tool chain, not the chips.

Anyway, great article and great objectivity.

P.S. and check out the Teensy 3.1. It has libraries for just about any hobby project and a lot of pro projects. I am really excited about this chip because it has a lot of tools for analyzing audio. Amazingly he keeps the cost of this board down to less than $20. What is really interesting is that his symbiosis with the Arduino project and constant contributions to that project has allowed him to create a market for an Arduino “like” board with significant added value. This would not be possible without free tools IMO.

Hello Gerry,

I had the same problem with PIC and AVR. It is a shame that Microchip could not keep up with the ever growing Arduino scene.

The main advantage for me is the integrated USB port on the PIC18F processors. I made a PCB to make it possible to use the Pinguino or Swordfish compiler. This makes it as easy as using the Arduino boards.

Please take a look at my Kickstarter page.

https://www.kickstarter.com/projects/715586821/ping2550-development-pcb-for-pinguino-ide-like-ard

It is a bit like the widely used atmega328 boards. And with a wide choice of bootloaders it is easy to adapt to your own project.

Gerard

Hello Gerry

You are absolutely right. In former projects I mostly programmed the PICs in Assembler so I did not care much about the Compilers. But as Projects get bigger I want to do C++ or at least C and I have already had a mess with those Compilers. I could not even compile my latest Microchip demo Software and I have not had time so far to investigate the Compiler issue.

However I would put even more emphasis on the fact that Microchip does NOT deliver an IDE for their products. I would even say that this is a large risk for the future of the Company. They are really endangering their chip Business in the long term. There is nothing wrong with the Controllers, they are absolutely great in my opinion, but the IDE issue (not having Compilers means they have no IDE) is not acceptable. What the hell? I even considered paying for the Compilers but I did not, as I would have to pay for 3 Compilers as I do Projects for 8/16/32 Bit!

And the prices of the Compilers, I can only ask “Are you serious?”. 1000 Euro just to compile only the 8 Bit MCUs? You must be kidding me. For less I get Visual Studio and Intel Compiler together. And that are full IDEs with profilers, Debuggers and much more. So not only that everything is extra, but even the prices are way off. If they would have sold a real IDE with Compilers for 100 Euro, yeah not the best but I would even pay.

But even more: You have such a lot of Compiler Options that you are simply off. I wanna do C++? Afaik I would have to pay another 1300 Euro. And I still have the risk that one Compiler just does not fit with a certain library. Again: Microchip has no IDE.

To put it shortly: I am really pissed off by this issue and I want to thank you for your article and for pointing this out. Currently all my Projects are on hold on the digital side and I am focusing on the analog parts. But I have to come back to that and I will either use Raspberry or TI if these issues persist. Yeah I am only one developer and may be using only some 10K PIC parts, but I am sure there are more out there who think the same.

First thank you very much for your Videos. They are absolutely great and have a very large benefit for experienced watchers. I like your Video blog significantly more than that of Dave Jones – which I like as well!

Hello Hans,

Thank you very much for your comments and feedback. I agree with your frustrations in relation to Microchip, they should look at what they are doing in this regard but so far they have maintained the same position so I am not expecting a change any time soon. You are welcome with regards to the video blog I am glad that you find it useful.

Regards,

Gerry

Interesting article. Though a bit long as you said.. I was heavily into Microchip from 1997 until around 2004, also as an official consultant, and did lots of different projects, but there was always one thing that was really lacking: compilers and IDE. I mean during this period you couldn’t even do proper C source level debugging… try to watch e.g. something basic like a struct. Ranting and complaining to Microchip gave little, and if I remember correctly Microchip provided only the old Win16(!) application until around 2002, long after Win95 and 2000. And MPLAB32 was beta material for many years. So I told them f# off and switched to Atmel (and MSP430 partly), never looked back! Atmel tools have always been far ahead, and the package they provide today with good proper compilers and a very good IDE, well, Microchip sure can’t beat them.

Flashback to 1998: I was doing some PIC17 work. Microchip had taken over another compiler company, if I remember correctly a year or so before, and of course were charging money for their compiler.. but the compiler was so rotten! You wouldn’t imagine! Man I struggled. I asked them how the heck they could charge us money for this bug-ridden shit, but got only the normal Microchip way of answering… it will get better… well it took quite a few years… 8051 tools at that time were paradise by comparison.

So yes, Microchip have always treated their development tools like something they don’t really care for… It’s really strange. But I don’t care for them at all anymore either, they caused me too many problems and didn’t listen at all to requests and inputs. My outlook for Microchip is not very positive as they still are not working with ARM cores and still don’t care about tools. My current employer, a large 100.000+ employees company have also kicked them out due to that fact.

Of course I have done some occasional work on PICs up until now as well, so I have some idea of the current status, but, well, the tools are still inferior to those of e.g. Atmel.

Hi Thomas, thanks for the comments, you are not alone in your thinking. I still like Microchip parts and they are still my go-to micro mainly because I have not had the incentive to switch to something else yet. The tools they have work well now (apart from in-chip debugging which is flakey at best), but other than that the latest IDE and compilers are nice, albeit expensive for the optimised versions which is a shame. Thanks for the comments and for reading. Gerry

Excellent article. Thanks. Just like Thomas, I was heavily into Microchip from 1990’s until around 2004. I was not pleased with the state of MPLAB or Hitech at the time so I opted for CCS-C until constant update costs became ridiculous. In my search for affordable options I found out about the Atmel solution… since then, my box of Microchip parts remains untouched. You nailed it. The Microchip parts are great to work with but the tools and related “pay the toll” approach to software IDE’s and compilers are oppressive and burdensome. It made the pro-Atmel decision for me quite easy.

Based on history though, I also see no sign that Microchip will change. General Motors thought they were number 1 as well and felt that they did not have to change how they operated because everything was “just fine”… and then… it wasn’t.

Hi Pete,

Yes absolutely spot on, Microchip will one day realise this too…hopefully it will not be too late when they do. Thanks for the comment and for reading the article.

Gerry

That’s really disappointing that Microchip doesn’t have completely open tools even with its PIC32. The PIC32 line is really nice, MIPS architecture, single cycle execution and all the features you’d find in a PIC. Without a completely non-proprietary, no-worries toolchain it just doesn’t have that value of not spending a bunch of time and money trying to program the things.

Hi Aaron, yes I completely agree, thanks for your comments. Gerry

I’ve been using PIC 18f14k50’s with open source JAL – http://www.justanotherlanguage.org/ for a number of years. Teaching middle/ high school kids electronics/ programming/ robotics. The PICS/ JAL work really well & inexpensive. JAL has a pretty good set of open source libraries that are easy to use. Good support community. Could be greatly expanded.

Take note Microchip!

I’ve bought 100’s of your devices but steer clear of Microchip compilers & IDES which are not beginner or hobbyist friendly.

Hi John,

Thanks for your comments and link to the JAL open source project.

Gerry

The only thing to like about MCHP is they still make parts in DIP packages. If not for that I would jump to Atmel in a second.

I thought Atmel also make parts in DIP packages. I understand the difficulty in switching, it’s a big learning curve. I have been designing a project around a PIC32 and I have come across problems that I can’t seem to get any help with, so that coupled with Microchip’s dumb tooling strategy has been enough for me to move the project to an ARM based part, the learning curve is steep but I hope it will be worth it. I have always assumed that Microchip were leading the pack when it comes to on-board modules but after some research it turns out not to be the case at all, in fact they seem to be well behind the curve in some areas. I hope to blog about my experience when I have done enough to have something useful to say. Thanks for the comments.

Gerry

Atmel makes 8 bit dip parts but I want at least 16 bit performance. Hey Atmel put one of your 32 parts in a Dip package for me and I’m on board.

Thanks for the post, and for many interesting comments.

I agree with you entirely on using PIC microcontrollers. I evaluated the others as I got started with these amazing electronic machines and PIC seemed to stand out above the rest. I’m glad that there are people out there who do like the C-compilers, though I am not one of those people. I use the assembly language and it was extremely simple for me to learn. To me, it was very much like the BASIC programming language that I used in the 80’s on an IBM PC Jr., even saving my programs to a cassette recorder since we did not have a disk drive yet. I like knowing exactly what is happening at all times which is very important in high speed chip to chip communications, etc. Thanks for your great insights. The transition to MPLAB X has been difficult and I don’t like it. The only reason that I need to use it right now is that MPLAB 8 doesn’t support the 12LF1571. Things are just harder to find and use, that’s my opinion anyway [I don’t like change ;-)]. Happy programming!

As someone that is brand new I can definitely relate to this:

“IDE stands for Integrated Development ENVIRONMENT, and any development ENVIRONMENT is incomplete in the absence of a compiler”

I assumed that the compiler would be installed so when I got missing compiler errors I was pretty surprised. They (Microchip) have a great example of a successful business model that they could follow. The Arduino took the Maker movement by storm. Microchip could have led that storm had they paid attention

Hi Mike,

In fairness to Microchip they do provide the compilers so you just have to know to install them too, given how complicated it could be, they have made it reasonably simple, despite the obvious commercial game they have to play with the modular approach. Their XC8 and XC32 series compilers are pretty good, even the unoptimised free version, I certainly have not hit any blockers using them. I do agree though, they should have paid more attention to the maker market, well at least if they wanted that market, maybe their business model does not need that business. Thanks for the comments.

Gerry

Hi there I am so excited I found your site, I really found you by error, while I was searching on Digg for something else, Regardless I am here now and would just like to say thanks for a remarkable post and a all round enjoyable blog (I also love the theme/design), I don’t have time to look over it all at the minute but I have saved it and also added your RSS feeds, soo when I have time I will be back to read a great deal more, Please do keep up the superb work.

Hi Cathryn,

Thank you for your comments and feedback. I have been a little quiet on the blog front for a while now but hope to get back to it real soon.

Gerry

Hello,

I would not recommend avr32. Its support in GCC is spotty, and Atmel seems not to upstream their changes well.

Also the support for cross platforms programming tools is nearly non existent. I had to hack some binaries out of old versions of the official atmel toolchain, because the new version does not support linux any more. Disgusting.

Also, their libraries are very very poorly written, they seem to use a team of ever changing interns, or whatever.

Well, Every silicon has pros and cons.

AVR 8-bit being very fast in execution can give us more bandwidth to do other stuff in application. The tools are nice but AVR on chip peripherals need improvement. And the toolchain requires something like one click installation with frequent updates.

PIC 8-bit on other hand runs slow, have only one working register, lots of register swapping, and so we get slower 8-bit counterpart. But what lacks in core is compensated with on chip peripherals. And they r great, i was able to do single loop motor control for BLDC, also app notes like AN1980 shows how peripherals changed that. The instruction set for PIC 8-bit is also very optimized and only few required to do complex tasks.

PIC32 is great MCU too, comes in variety of package, high speed, have floating point unit, 5 stage pipeline, single cycle execution, reaching almost 330 DMIPS (and it reaches that performance). I did some complex multi loop control system design and state space design model (which i cannot explain here) did good on PIC32 MIPS.

ARM on other hand is great too with cortex-M series.

Yes, i do agree, the code size increases with free MCHP compiler with very small performance degradation (actually very small performance dip) but if ur application is fine with it, im fine with it. Optimization does reduce code size by 25% and the execution speed increases a bit. And this is disappointing. But every company is here to earn money. Some are greedy and some are not. But MCHP is not that greedy to make bad compilers. I never required to go for C++ honestly. C is more than enough.

I disagree with some of ur rants. Its like whining. Simply put, every MCU has pros and cons. use what suits ur application best. Obviously when it comes to the hobbyist, they look for performance, but i know hobbyists who look for simplicity like, get one click tools, install and play.

Honestly, MPLAB X is great compiler and if u and ur application can live with less optimized code, then whatever u use does not make a difference. But if u need more performance with free compiler go for ARM. For me, Im able to achieve all the performance with dsPIC and PIC32.

Currently, im looking out for ARM but the tool download and installation is way too involved, one has to go and do research to get all tools, its not one click install.

Yep, I agree with much of what you say. I did say right at the start of the article that I was not trying to create a flame war for various architecture fanboys. I think the overall point you make is a good one – different jobs have different requirements, so pick what works best for you. I tend to go with PIC because I know them and I know the tools, warts and all, so for me I have a workflow that works. I too have messed around with other toolchains and I don’t much like the ones I have tried. I like to rant sometimes – don’t we all 🙂

There are three reasons to prefer PIC over any other MCU (any vendor, not only Atmel): 1) You don’t know any MCUs beyond Microchip’s; 2) You work for Microchip and you are advertising your employer’s products; 3) You are masochistic enough to stay with an awful architecture when you have so many better alternatives (light-years better).

Yep, everyone is entitled to an opinion of course. I have other good reasons to work with PIC’s, despite having also worked on at least one project for each of MSP430, Tiva TM4C129, Atmel ATMEGA, ARM and 8051 derivatives, I still use PIC for my jellybean needs. I don’t think PIC’s are perfect but they are useful, cheap and easy to use once you know what to do with them – that of course though is just my own personal preference.

Gerry

and there:

http://hackaday.com/2016/01/20/microchip-to-acquire-atmel-for-3-56-billion/

Microchip acquired Atmel…

http://www.microchip.com/docs/default-source/announcements-documents/atmel-customer-letter-april-2016.pdf?utm_campaign=May_2016_eNewsletter_A&utm_medium=email&utm_source=Eloqua

So what’s next? PICMega? ATPIC?

That was certainly one way of solving the problem. It’s not really a surprise, it will be interesting to see how they ultimately amalgamate the product families. PICDuino maybe 🙂

Gerry

Very interesting article. As a newbie to microcontrollers I opted to start with Arduino for its ease of use, free IDE, etc. Indeed, many of the points you raised, with the intent of MAYBE trying PICs later on. Now that MicroChip has purchased Atmel, what’s your guess on what they’ll do? Are they going to stick with their closed source mindset (ala Microshit) as opposed to open source? If so, it doesn’t sound encouraging for the open source hobbyist/Maker community that has made the Arduino so popular. Reminds me of all the other examples of American businesses that have missed the boat over the years because of their limited (limiting) perspectives (transistorized radios, TVs, etc. that the Japanese and Koreans cleaned up on – the home VCR, etc., etc., ad nauseam). Without reading through all of the extensive comments, it doesn’t appear that anyone at MicroChip has ever responded to you as Atmel did. I’m sorely tempted to try to find a way to publicly shove your article in MicroChip’s face, and CHALLENGE them to respond!

Hi Doug,

I think they made the move to acquire Atmel simply because they failed to take the maker community by storm themselves, in that regard the Atmel team (quite possibly through good fortune) became the hardware that is now synonymous with open hardware – Arduino. Given Microchip had the commercial capability to simply acquire Atmel then you can’t really knock them for that, I think that was a defensive and probably wise move on the part of Microchip because Arduino based solutions were starting to appear in commercial products as the school leavers and makers took their knowhow into commercial environments. I very much doubt Microchip will close off the Atmel stuff at all, I think the reason they acquired is to get themselves into the open hardware/open source space and deal with the fact that PIC market share was being eaten away at by Atmel devices.

I expect internally for them it’s going to be a very tough integration, two groups of people with a different approach and different mindsets will take some effort and some time to smooth out. M&A’s are always difficult so if the intention of Microchip is to combine the two groups we can expect a year or so of not very much happening while they focus internally and sort themselves out. I imagine if they run the two companies independently then you can probably expect more of the same from both organisations. There are definitely economic advantages to combining fabrication of their chips, so I imagine part of their strategy would be to consolidate and reduce costs.

Thanks for your comments.

Gerry

Feel so relieved reading this. Thought I was the only one who has that impression about Microchip. I started with PIC but just had to move to AVR for these obvious reasons. I still use PIC once in a blue moon though. Bad tooling, Microchip.

I have been using PICs for many years, and I simply love them, however the MPLAB X platform must easily be the most frustrating platform that I have ever used. In a nutshell, it is completely unpredictable, the simulator is a piece of shit, the debugger is only half functional, the menu system is stupidity itself, I am also thinking of looking elsewhere for more user friendly and functional controllers with intelligent development platforms.

I’m surprised they do not know how to use a medicine to make their compilers work with optimization, microchip does not worry about avoiding this, leave the crying and learn to use the tool.