With the regulator set aside for a while, it's time to turn to the control circuitry. Before we get into the circuitry, though, we need to do some important calculations to help us select the right parts for the job. If you recall, one of the aims for the power supply module is to be able to set its personality (supported voltage and current range) to suit a variety of voltage ranges. I have chosen a number of ranges to work within and specified them in the table below. It is a relatively trivial task to add other ranges in the future, so long as we stay within the working ranges of the components used in the system.

| Range | Voltage Range | Voltage Resolution | Voltage Steps | Current Range | Current Resolution | Current Steps | Approximate Power | Regulator Gain |
|---|---|---|---|---|---|---|---|---|
| A | 0-6V | 1mV | 6000 | 0-5A | 1mA | 5000 | 30W | x2 |
| B | 0-16V | 5mV | 3200 | 0-2A | 1mA | 2000 | 40W | x5 |
| C | 0-20V | 5mV | 4000 | 0-2A | 1mA | 2000 | 40W | x10 |
| D | 0-30V | 10mV | 3000 | 0-1A | 1mA | 1000 | 30W | x10 |

The values in the table above are what we use to establish the correct control circuitry, so they deserve a bit of explanation. The voltage and current range columns speak for themselves: they tell us the expected programmable range of the regulator. The voltage and current resolution columns tell us the size of each step in the range. For example, range "A" has a resolution of 1mV/1mA, so we can program the output voltage from 0V to 6V in 1mV steps and the current limit from 0A to 5A in 1mA steps. The voltage and current steps columns tell us how many digital codes are needed to cover the full range at the stated resolution (range divided by resolution, so 6V ÷ 1mV = 6000 for range A).
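As a quick sanity check on the table, here is a small Python sketch that derives the step counts and the worst-case V × I power from each range's full-scale values and resolution. The numbers are copied from the table; the function names are just illustrative, and everything is kept in millivolts and milliamps so the division stays exact.

```python
# Derive the "Steps" and "Approximate Power" columns of the range table.
# Values are copied from the table; units are mV and mA to keep the
# arithmetic in integers.

RANGES = {
    # name: (max_mV, volt_res_mV, max_mA, current_res_mA)
    "A": (6000, 1, 5000, 1),
    "B": (16000, 5, 2000, 1),
    "C": (20000, 5, 2000, 1),
    "D": (30000, 10, 1000, 1),
}


def steps(full_scale: int, resolution: int) -> int:
    """Number of resolution-sized steps needed to span 0..full_scale."""
    return full_scale // resolution


def max_power_w(max_mV: int, max_mA: int) -> float:
    """Worst-case output power in watts at full voltage and full current."""
    return max_mV * max_mA / 1_000_000


for name, (v_max, v_res, i_max, i_res) in RANGES.items():
    print(name, steps(v_max, v_res), steps(i_max, i_res), max_power_w(v_max, i_max))
```

The step counts match the table for every range. The straight V × I product for range B comes out at 32W, so the 40W figure for that range reads as a rounded-up rating rather than an exact product.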

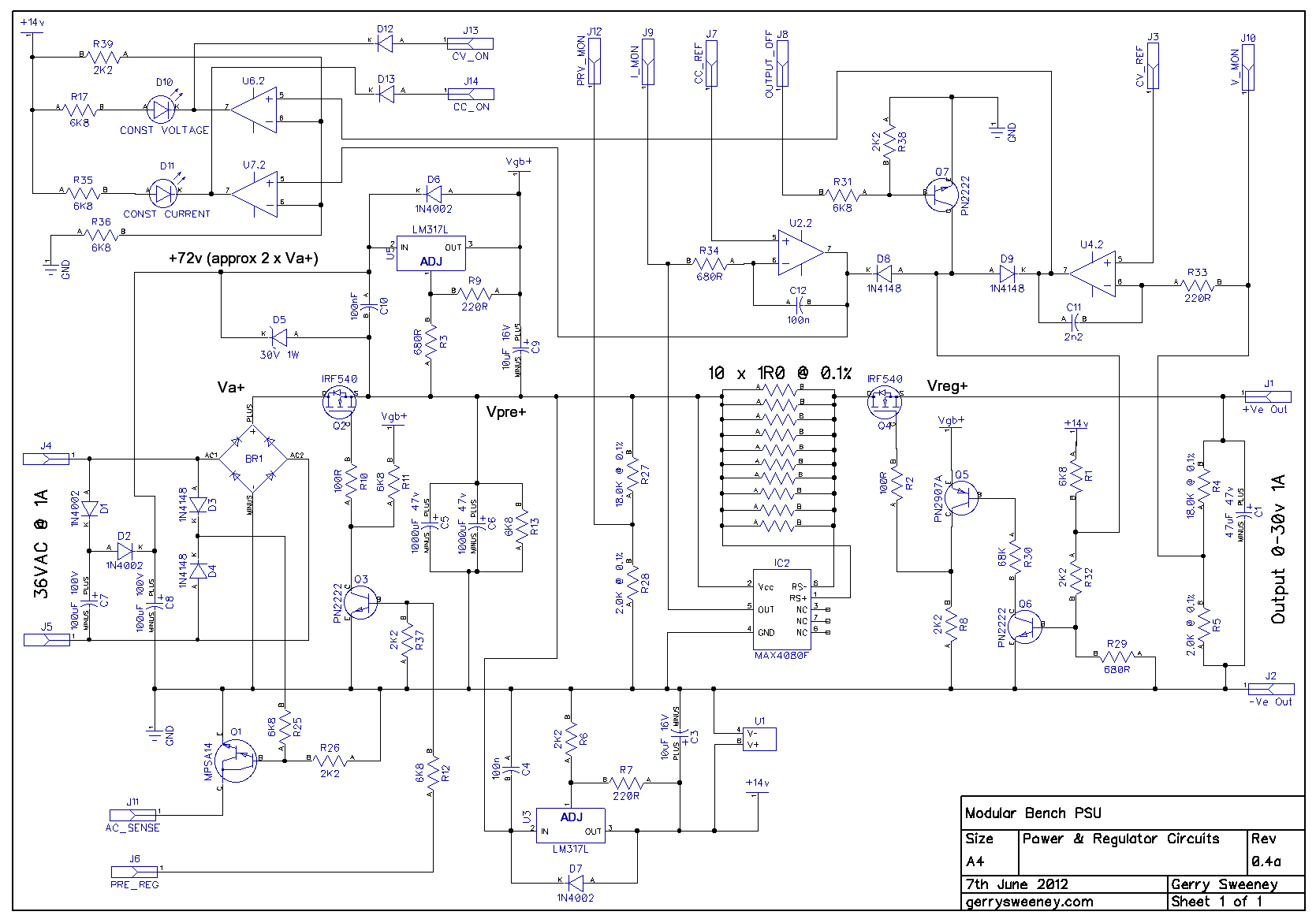

Getting our Voltage under Control

In order to get a digitally controlled voltage we need to use a DAC (Digital to Analog Converter). A DAC takes a digital "code" and translates it directly into a static voltage. However, DACs typically operate from 3.3V to 5.5V supplies, so their output range is limited accordingly; to control higher voltage ranges we need to ensure that the regulator circuit has gain. This is achieved by simply dividing the measured output voltage with two precision resistors before feeding it back into the error amplifier. The column called "Regulator Gain" shows the gain factor required for each voltage range. As you can see in the Part 4 schematic, the gain has been set to x10 by R4 and R5. It is also worth noting that these two resistors are the only components in the entire regulator circuit that have any impact on the accuracy of the programmed output. The effects of inaccuracies in the other components are automatically and fully compensated for by the DC servo effect created by the control loop. It is critical that R4 and R5 are accurate (I am using 0.1% tolerance resistors) and also have good temperature stability.
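To make the role of R4 and R5 concrete, here is a small Python sketch of the divider gain and its worst-case error. It assumes R4 is the top resistor of the divider (output to error amplifier) and R5 the bottom one (to ground); the 9kΩ/1kΩ values are hypothetical choices that give x10, not values read from the Part 4 schematic.

```python
# Closed-loop gain of a regulator whose output is fed back through a
# two-resistor divider. ASSUMPTION: R4 is the top resistor and R5 the
# bottom one; the values below are hypothetical.


def regulator_gain(r_top: float, r_bottom: float) -> float:
    """The loop forces the divided-down output to equal the control voltage,
    so Vout = Vctrl * (r_top + r_bottom) / r_bottom."""
    return (r_top + r_bottom) / r_bottom


def worst_case_gain_error(r_top: float, r_bottom: float, tol: float) -> float:
    """Largest fractional gain error when each resistor may be off by +/-tol."""
    nominal = regulator_gain(r_top, r_bottom)
    corners = [
        regulator_gain(r_top * (1 + a), r_bottom * (1 + b))
        for a in (-tol, tol)
        for b in (-tol, tol)
    ]
    return max(abs(g - nominal) / nominal for g in corners)


R4, R5 = 9_000.0, 1_000.0  # hypothetical values giving x10
print(regulator_gain(R4, R5))                # 10.0
print(worst_case_gain_error(R4, R5, 0.001))  # roughly 0.0018
```

With 0.1% parts the worst case (top resistor high, bottom resistor low) shifts the x10 gain by about 0.18%, which is why these two resistors deserve the tightest tolerance and best temperature coefficient in the design.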

Alright, get to the point, what about the DAC!

In order to select the right DAC resolution and voltage reference (more on that later) we need to establish the range and resolution of the required control voltage. This is important because we must include the gain of the regulator, which in turn affects the resolution requirements of the DAC. The following table expands on this with the key values we need to consider.

| Range | Output Range | Resolution | Steps | Regulator Gain | Reference Range | Reference Resolution |
|---|---|---|---|---|---|---|
| A | 0-6V | 1mV | 6000 | x2 | 0-3V | 500µV |
| B | 0-16V | 5mV | 3200 | x5 | 0-3.2V | 1mV |
| C | 0-20V | 5mV | 4000 | x10 | 0-2V | 500µV |
| D | 0-30V | 10mV | 3000 | x10 | 0-3V | 1mV |

We calculate the control voltage range by dividing the target output voltage by the regulator gain. For example, on range A, to obtain an output voltage of 6V we need a control voltage of 6V ÷ 2 = 3V. To work out the control voltage resolution required, we simply take the maximum control voltage and divide it by the number of steps required; in this case 3V ÷ 6000 = 500µV per step.
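The same two divisions can be run over every range in a few lines of Python. This sketch uses `fractions.Fraction` so that values like 3.2V and 500µV come out exactly; the `RANGES` data is copied from the table above and the function name is illustrative.

```python
from fractions import Fraction

# (max output in volts, number of steps, regulator gain) from the table above
RANGES = {
    "A": (6, 6000, 2),
    "B": (16, 3200, 5),
    "C": (20, 4000, 10),
    "D": (30, 3000, 10),
}


def reference_requirements(v_max: int, steps: int, gain: int):
    """Return (maximum control voltage, control voltage per step) in volts."""
    v_ref_max = Fraction(v_max, gain)    # control voltage = output / gain
    return v_ref_max, v_ref_max / steps  # step size = control span / steps


for name, row in RANGES.items():
    v_ref, step = reference_requirements(*row)
    print(f"{name}: 0-{float(v_ref):g}V, {float(step) * 1e6:g}µV per step")
```

Running it reproduces the Reference Range and Reference Resolution columns, with ranges A and C setting the tightest requirement at 500µV per step.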

From the above table we can clearly see that we need a maximum of 6000 steps to cover all ranges, which requires a DAC resolution of at least 13 bits (12 bits gives only 4096 codes, 13 bits gives 8192). That is really inconvenient, because DACs increase in price as the bits go up and there is a very notable jump in price above 12 bits. A potential solution is to increase the step size on the 0-6V range from 1mV to 2mV, which would halve the number of steps to 3000 and bring it nicely within range of a 12-bit DAC. I did not want to do that, though; it's not what I set out to do, and I needed to maintain the target resolution of 1mV on the 6V range.

Thinking about it some more, I realised I could probably increase the effective resolution of the DAC in software, using a technique whereby the DAC output is continuously set to a series of values at high speed to create an average voltage that sits between steps. This is known as dithering or modulating the DAC output. The downside is that it introduces a peak ripple, which reflects the continuously changing voltage level. However, filtering this out is a straightforward affair because the amplitude of the ripple is very small, so as long as we have enough current drive from the DAC, a simple second-order passive low-pass filter should be all that is needed. Something worth noting, though: the ripple amplitude as a percentage of the output voltage is not constant; at lower voltages the ripple constitutes a larger proportion of the overall signal and therefore introduces more error. In practice this should still end up well within tolerance, and if not we can always put in a higher-resolution DAC!
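Here is a minimal Python sketch of both points: how many bits a given step count needs, and one possible dithering scheme. The dither shown is a first-order error accumulator (sigma-delta style), which is my choice for illustration and not necessarily the scheme that will end up in the firmware; the function names are hypothetical. It emits a stream of whole DAC codes whose average lands on a fractional target, such as the half-code needed for 500µV steps on a 1mV-per-code DAC.

```python
import math


def min_dac_bits(steps: int) -> int:
    """Smallest n such that 2**n >= steps."""
    return (steps - 1).bit_length()


def dither_codes(target: float, n: int) -> list[int]:
    """First-order dither (one possible technique): emit n integer DAC codes
    whose average approaches a fractional target code."""
    base = math.floor(target)
    frac = target - base
    acc = 0.0
    codes = []
    for _ in range(n):
        acc += frac          # accumulate the fractional part
        if acc >= 1.0:       # once a whole code has built up, step up by one
            acc -= 1.0
            codes.append(base + 1)
        else:
            codes.append(base)
    return codes


print(min_dac_bits(6000))         # 13
print(dither_codes(1500.5, 8))    # alternates 1500 and 1501
print(dither_codes(1500.25, 8))   # 1501 once in every four codes
```

On range A, an output of 3.001V needs a control voltage of 1.5005V, which is code 1500.5 on a 1mV-per-code DAC; alternating 1500 and 1501 averages to exactly that. The peak-to-peak ripple before filtering is one DAC code (1mV here), which is what the low-pass filter has to remove.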

One final thing: we need to decide on a reference voltage. DACs require a reference voltage in order to provide an accurate output. Think of a DAC as a programmable potentiometer: the reference voltage is strapped across the two outer pins, the output is the wiper, and the code sets the position of the wiper. A 12-bit DAC gives us 4,096 codes, so if we use a reference voltage of exactly 4.096V, each code is worth 1mV and we get a theoretical output of 0V to 4.095V in 1mV steps. These are nice round numbers that make the software easier to develop, so that's what we will go for.
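The payoff of the 4.096V reference shows up in the arithmetic: the ideal output is Vref × code ÷ 4096, which collapses to 1mV per code. This Python sketch works in integer microvolts; the function names are hypothetical, and `output_to_code` also shows which range targets fall between codes and therefore need dithering.

```python
from fractions import Fraction

VREF_UV = 4_096_000  # 4.096V reference, in microvolts
FULL_SCALE = 4096    # 12-bit DAC: codes 0..4095


def code_to_uv(code: int) -> int:
    """Ideal DAC output in microvolts: Vref * code / 2**12."""
    if not 0 <= code < FULL_SCALE:
        raise ValueError(f"code {code} outside 0..{FULL_SCALE - 1}")
    return VREF_UV * code // FULL_SCALE


def output_to_code(out_uv: int, gain: int) -> Fraction:
    """DAC code needed for a target regulator output (before dithering).
    A non-integer result means the target falls between two DAC codes."""
    control_uv = Fraction(out_uv, gain)          # undo the regulator gain
    return control_uv / (VREF_UV // FULL_SCALE)  # 1000uV per code


print(code_to_uv(1234))                # 1234000 (1.234V)
print(output_to_code(3_001_000, 2))    # 3001/2, i.e. half-way between codes
print(output_to_code(30_000_000, 10))  # 3000
```

Note that the top code is 4095, so the ideal full-scale output is 4.095V rather than 4.096V.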

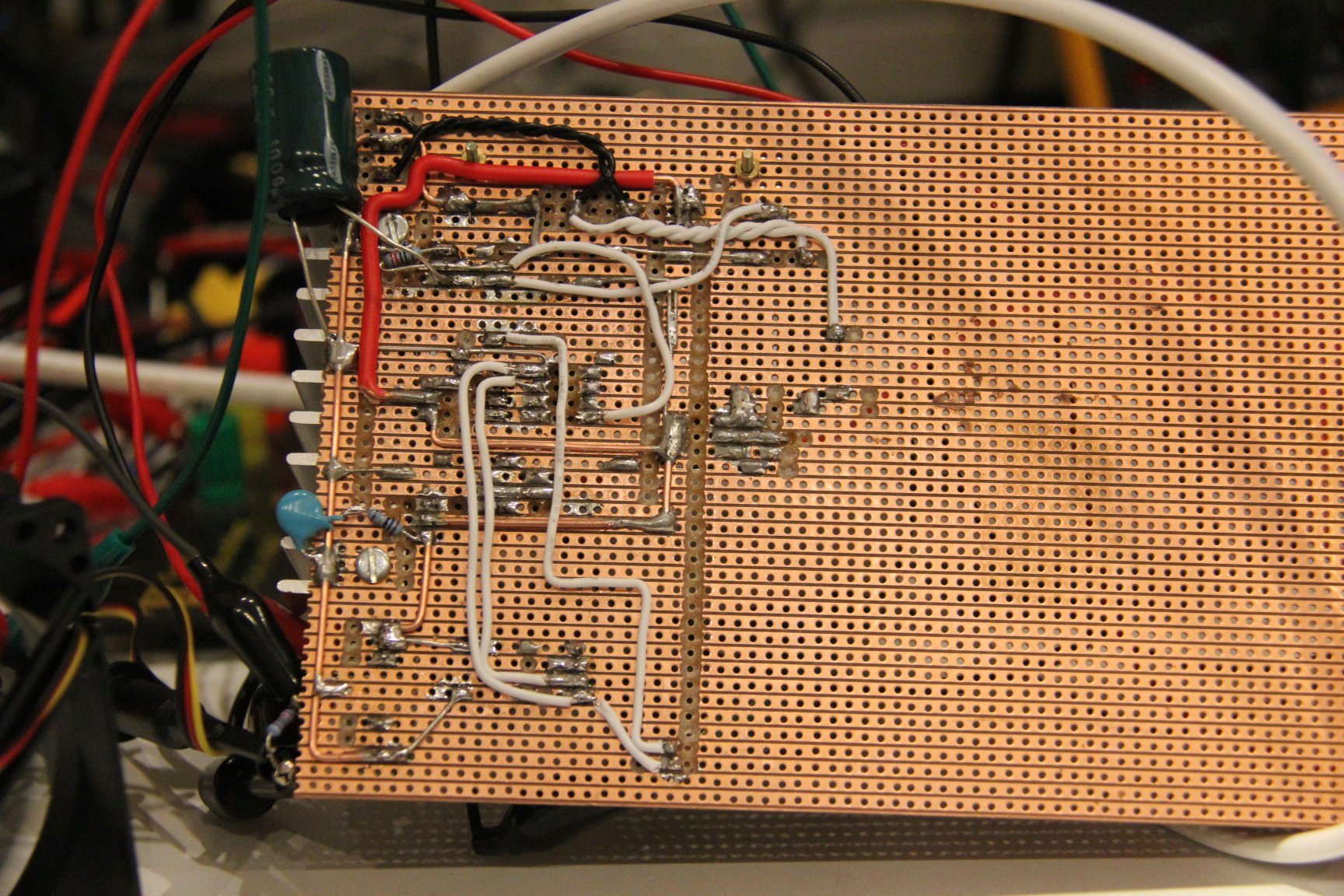

So, in summary: we have a design plan, we have our calculations, and we know we need at least a 12-bit DAC. I am going to start with Microchip's MCP4822, a dual 12-bit DAC. It's low cost and seems a reasonable starting point for some experimenting, and it also has a built-in 2.048V reference and a selectable x2 output gain, giving us 0-4.095V in 1mV steps.
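Ahead of Part 6, here is a Python sketch of the 16-bit write command the MCP4822 expects over SPI, based on my reading of the datasheet's register layout: bit 15 selects DAC A or B, bit 13 (GA) selects 1x or 2x gain, bit 12 (SHDN) enables the output, and bits 11-0 carry the code. The function names are hypothetical, and chip select and LDAC handling are left out; check the bit assignments against the datasheet before relying on them.

```python
def mcp4822_word(channel: str, code: int, gain2x: bool = True, active: bool = True) -> int:
    """Build a 16-bit MCP4822 write command (layout as read from the datasheet)."""
    if channel not in ("A", "B"):
        raise ValueError("channel must be 'A' or 'B'")
    if not 0 <= code <= 0xFFF:
        raise ValueError("code must fit in 12 bits")
    word = code                # bits 11-0: DAC code
    if channel == "B":
        word |= 1 << 15        # A/B select: 1 = DAC B, 0 = DAC A
    if not gain2x:
        word |= 1 << 13        # GA: 1 = 1x gain, 0 = 2x gain
    if active:
        word |= 1 << 12        # SHDN: 1 = output active, 0 = shut down
    return word


def to_spi_bytes(word: int) -> bytes:
    """The command is clocked out MSB first, as two bytes."""
    return word.to_bytes(2, "big")


def mcp4822_vout_uv(code: int, gain2x: bool = True) -> int:
    """Ideal output in microvolts: 2.048V * code / 4096 * gain."""
    return 2_048_000 * code * (2 if gain2x else 1) // 4096


word = mcp4822_word("A", 3000)   # DAC A, x2 gain, active, code 3000
print(hex(word))                 # 0x1bb8
print(to_spi_bytes(word))        # b'\x1b\xb8'
print(mcp4822_vout_uv(3000))     # 3000000 (3.000V)
```

With the x2 gain selected, each code is worth 1mV, matching the 4.096V-reference arithmetic above.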

In Part 6 I will work up a circuit, get a microcontroller talking to the DAC chip and do some performance measurements to see what we come up with.

This content is published under the Attribution-Noncommercial-Share Alike 3.0 Unported license.